Friday 2/12… For the sake of conversation, I wanted to share some thoughts about forecast variance (i.e., the tendency for forecasts to vary from one meteorologist to another), forecast advancements, and forecast techniques. It goes without saying that atmospheric prediction is *extremely* difficult and time-consuming. During an active pattern, a forecast for a 36-hour period can take me up to 4 hours to formulate; if there is any risk of winter weather at all, it can take me 6+ hours. We are all flooded with information these days, and atmospheric scientists are no exception.

Atmospheric Models

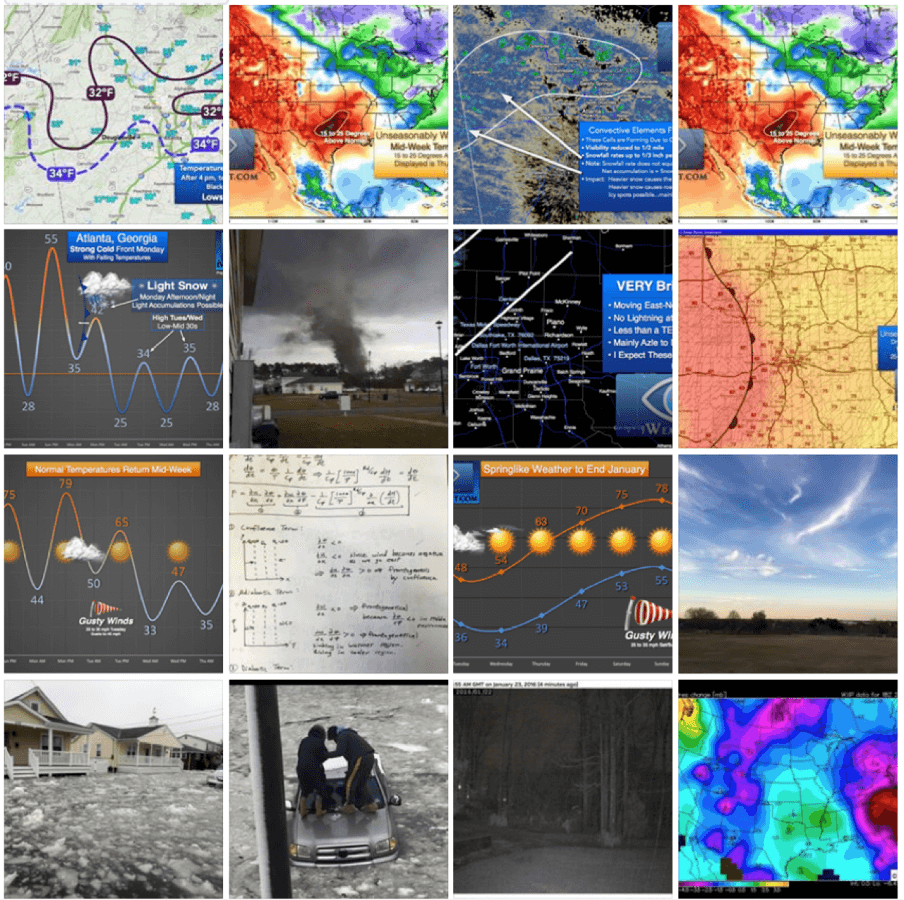

When I was in high school 3 decades ago, there were really only 2 numerical prediction models, and (in retrospect) they were not very accurate. There’s no telling how many times I got my hopes up for snow and then it didn’t happen. In the 30 years since, an enormous amount of research has led to improved physics, microphysics, fluid dynamics, and parameterizations within the numerical prediction models. A huge increase in supercomputing power has also allowed significant improvements in the spatial and temporal resolution of our models. NOAA supercomputers perform *quadrillions* of calculations every second (Press Release from NOAA), and we now have close to a dozen reliable numerical prediction models, and ensembles thereof, that forecasters must analyze bit by bit, layer by layer, and parameter by parameter several times a day to produce an accurate forecast.

Contrary to popular myth, computers do not “tell us” what to forecast. To generate a forecast, we have to analyze the individual forcing functions (i.e., the dynamic/physical processes that create the weather), then forecast how those processes evolve and interact with each other via various teleconnections & feedback loops. Finally, we determine the types and magnitudes of intertwined weather phenomena those processes will create. I go to bed some nights with a massive headache from staring at seven large monitors all day, then wake up the next day to start all over again.

Winter Weather Forecasting and Ohhh the Migraines

Winter weather is especially challenging. When we forecast a winter storm, we are predicting something that doesn’t even exist at the time the initial forecasts are made. Again, we are forecasting the behavior of a complex fluid by predicting how the various atmospheric dynamics and other physics will evolve to create vertical motion several days down the road. Vertical motion is the process that leads to condensation, the formation of clouds, and ultimately precipitation. To predict winter weather, we then have to assess the pre-existing airmass (its evolution and physical character) as well as the formation and propagation of new airmasses, all of which may interact with the aforementioned forcing to create winter precipitation. Next, we analyze every layer of the atmosphere from the top down to determine the types of precipitation, every precipitation transition, the timing of each transition, and the accumulation from each precipitation type (assuming multiple transitions). On top of that, we must account for a multitude of other factors, such as precipitation evaporating or sublimating before it reaches the ground (virga) and evaporative/sublimative cooling, which can cause the temperature to drop very quickly in some situations (many ice storms have formed as a direct result of evaporative cooling).
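
Evaporative cooling is easier to picture through the wet-bulb temperature: roughly the lowest temperature air can be cooled to by evaporating water into it, which is why the air temperature tends to fall toward the wet-bulb value when precipitation falls into a dry low-level airmass. Below is a minimal sketch, assuming near-sea-level pressure, that uses Stull’s (2011) empirical fit, chosen here purely for illustration; `wet_bulb_stull` is a hypothetical helper name, not part of any forecasting package.

```python
import math


def wet_bulb_stull(temp_c: float, rh_pct: float) -> float:
    """Approximate wet-bulb temperature (deg C) from air temperature (deg C)
    and relative humidity (%) using Stull's (2011) empirical fit.

    Assumption: standard sea-level pressure. The fit was derived for roughly
    RH 5-99% and air temperatures of -20 to 50 deg C, so reject other inputs.
    """
    if not (5.0 <= rh_pct <= 99.0 and -20.0 <= temp_c <= 50.0):
        raise ValueError("inputs outside the range the empirical fit covers")
    return (
        temp_c * math.atan(0.151977 * math.sqrt(rh_pct + 8.313659))
        + math.atan(temp_c + rh_pct)
        - math.atan(rh_pct - 1.676331)
        + 0.00391838 * rh_pct ** 1.5 * math.atan(0.023101 * rh_pct)
        - 4.686035
    )


# Compare dry vs. nearly saturated air that is just above freezing.
for t, rh in [(2.0, 50.0), (2.0, 95.0)]:
    print(f"T = {t:.1f} C, RH = {rh:.0f}% -> wet-bulb = {wet_bulb_stull(t, rh):.1f} C")
```

With these inputs, the drier air at 2 °C comes out a little below 0 °C while the nearly saturated air stays just above freezing, which illustrates how precipitation falling into dry air near freezing can cool the surface layer enough to matter for ice. The fit covers evaporation of liquid water only; it does not model sublimation.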

We also have to consider atmospheric instability and the potential for convection. If you followed my snow forecasts last week, you will recall that I was concerned about the possibility of convective elements that could lead to gusty winds and heavier snow showers on Tuesday (2/9/16). About 5 days in advance, I identified a layer of conditional instability that would develop and likely cause convective snow bursts; that forecast worked out very well and is well documented. However, I have to admit that I almost missed that critical signal. In a last-minute round of analyses, I ran the vertical thermodynamic profiles through state-of-the-art software designed to analyze model-simulated soundings (the same software I use to predict severe thunderstorms and heavy rain events), and there it was: conditional instability that would ultimately become the driving force behind the widespread light snow that developed after sunrise (with daytime heating) on Tuesday, producing embedded convective snow bursts and gusty winds.
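
Conditional instability has a compact textbook definition: a layer is conditionally unstable when its environmental lapse rate lies between the saturated (moist) adiabatic lapse rate and the dry adiabatic lapse rate (about 9.8 K/km), so a saturated parcel lifted through it keeps rising while an unsaturated one does not. The sketch below checks a single layer against that definition. It is not the sounding-analysis software mentioned above; the constants are standard approximations, saturation is computed over liquid water with Bolton’s (1980) vapor-pressure formula, and the function names are hypothetical.

```python
import math

G = 9.81        # gravitational acceleration, m s^-2
CP_D = 1004.0   # specific heat of dry air at constant pressure, J kg^-1 K^-1
R_D = 287.0     # gas constant for dry air, J kg^-1 K^-1
L_V = 2.5e6     # latent heat of vaporization, J kg^-1 (held constant here)
EPS = 0.622     # ratio of gas constants R_d / R_v

DRY_LAPSE = G / CP_D * 1000.0  # dry adiabatic lapse rate, K/km (~9.8)


def saturation_mixing_ratio(temp_c: float, pres_hpa: float) -> float:
    """Saturation mixing ratio (kg/kg) over liquid water, Bolton (1980) e_s."""
    es = 6.112 * math.exp(17.67 * temp_c / (temp_c + 243.5))  # hPa
    return EPS * es / (pres_hpa - es)


def moist_lapse_rate(temp_c: float, pres_hpa: float) -> float:
    """Saturated adiabatic lapse rate (K/km) from the standard expression."""
    t_k = temp_c + 273.15
    rs = saturation_mixing_ratio(temp_c, pres_hpa)
    num = G * (1.0 + L_V * rs / (R_D * t_k))
    den = CP_D + L_V ** 2 * rs * EPS / (R_D * t_k ** 2)
    return num / den * 1000.0


def classify_layer(z_bot_km: float, t_bot_c: float,
                   z_top_km: float, t_top_c: float, pres_hpa: float) -> str:
    """Classify one layer's static stability from its bottom/top temperatures.

    pres_hpa is a representative pressure for the layer (an assumption of
    this sketch); the moist lapse rate is evaluated at the layer-mean temperature.
    """
    if z_top_km <= z_bot_km:
        raise ValueError("layer top must be above layer bottom")
    # Environmental lapse rate, positive when temperature falls with height.
    lapse = (t_bot_c - t_top_c) / (z_top_km - z_bot_km)
    gamma_m = moist_lapse_rate((t_bot_c + t_top_c) / 2.0, pres_hpa)
    if lapse > DRY_LAPSE:
        return "absolutely unstable"
    if lapse > gamma_m:
        return "conditionally unstable"
    return "stable"


# A cold layer cooling 9 K over 1 km sits between the moist and dry rates.
print(classify_layer(1.0, -8.0, 2.0, -17.0, 850.0))
```

Real sounding software integrates parcels through the whole profile (and accounts for ice processes); a layer-by-layer lapse-rate check like this only flags where such instability could exist.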

I am saying all of this because I love to teach and explain the inner workings of the science, and also to express my utmost respect for any meteorologist who puts their work on public display day after day. Whether right or wrong, they wake up each day and head to work (usually on an exhausting schedule made up of shift rotations), analyze a mind-boggling amount of data, then publish their analyses and predictions for the public to consume and criticize. Imagine for a second that your work were published every day for each of us to critique; it’s a scary thought for 99% of us, and it’s definitely not easy.

I have friends who work at the National Weather Service… folks I went to college with and others I worked with when I was a forecaster for the NWS. I always worry when our forecasts are misaligned, for two reasons:

- I do not like creating public confusion. The meteorological community has worked very hard to improve messaging (especially regarding anticipated impacts) in pursuit of a Weather-Ready Nation; and

- I don’t want my dissent to be construed as criticism. Ultimately, if the dynamics/thermodynamics change or if I reach a different scientific conclusion for any reason, I will certainly change my forecast.

We all have to forecast what we believe the atmosphere will do days in advance, based on theory/physics, the data, our training, our research, and our unique professional experiences. I do not consider forecasting to be a competition, but I am keenly aware that the public views it that way. A forecast consensus does not equate to accuracy and it certainly doesn’t validate a forecast as “the correct one” before the end result can be observed. Only the final event, in hindsight, allows us to grade the performance of a forecast.

My 30% Confidence Threshold for Mentioning Winter Weather

I’m not one to hedge when it comes to forecasting (a lot of that is my own stubbornness); I try not to ride the “slight chance” train, although the scientific method does require an expression of uncertainty. I do my best to forecast with certainty, and I do not allow my forecasts to flip-flop.

For the past two years (now going into the third year), I have been testing a forecast strategy for predicting *problematic* winter weather (i.e., winter weather that impacts travel, businesses, schools, etc., not flurries that flutter about). This strategy requires a 30% confidence threshold before I mention winter weather. It is based on the known behavior, limitations, and tendencies of the numerical prediction models, their propensity for changing solutions more than 96 hours out, and my own scientific/forecasting experiences.

Consider this example… assuming you’re not a professional gambler, would you bet the house on a 30% chance? Statistically and climatologically speaking, my experience has shown that if I can’t muster 30% confidence that an event will happen, it’s not going to happen. This strategy has yielded the following results since I began the experiment during the 2013-14 winter season: false alarms = 0; probability of detection (event occurred) = 100%. These numbers speak only to performance in terms of 1) false alarms (crying wolf) and 2) “calling it” when it needs to be called. They say nothing about the accuracy of internal details (i.e., precise amounts, etc.).
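
The two scores above come from the standard 2×2 contingency table used in forecast verification: probability of detection, POD = hits / (hits + misses), and false alarm ratio, FAR = false alarms / (hits + false alarms). Below is a minimal sketch, assuming each outlook can be reduced to a single confidence value and a yes/no outcome; the `Outlook` record, the `verify` function, and the sample values are hypothetical illustrations, not data from the forecasts described here.

```python
from dataclasses import dataclass


@dataclass
class Outlook:
    confidence: float  # forecaster confidence (0 to 1) that a problematic event occurs
    occurred: bool     # whether a problematic event was actually observed


def verify(outlooks: list[Outlook], threshold: float = 0.30) -> dict[str, float]:
    """Apply a mention threshold, then score the resulting yes/no forecasts."""
    hits = misses = false_alarms = correct_negatives = 0
    for o in outlooks:
        # Winter weather is "mentioned" only at or above the confidence threshold.
        mentioned = o.confidence >= threshold
        if mentioned and o.occurred:
            hits += 1
        elif mentioned:
            false_alarms += 1
        elif o.occurred:
            misses += 1
        else:
            correct_negatives += 1
    nan = float("nan")  # score is undefined when its denominator is zero
    pod = hits / (hits + misses) if hits + misses else nan
    far = false_alarms / (hits + false_alarms) if hits + false_alarms else nan
    return {
        "hits": hits,
        "misses": misses,
        "false_alarms": false_alarms,
        "correct_negatives": correct_negatives,
        "pod": pod,
        "far": far,
    }


# Hypothetical sample, for illustration only.
sample = [
    Outlook(0.60, True),
    Outlook(0.35, True),
    Outlook(0.20, False),
    Outlook(0.10, False),
]
print(verify(sample))
```

Neither POD nor FAR rewards correctly staying quiet (correct negatives), and neither measures amounts or timing, which is why a perfect pair of scores still leaves the internal details unverified.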

So, I’ve had no false alarms, and I’ve predicted 100% of the events that occurred with impacts to businesses, schools, public transportation, aviation, etc., in both North Texas and northern Georgia since the experiment began in the 2013-14 winter season. This is a significant step forward because, in the era of social media, there is an enormous amount of hype from untrained forecasters and/or forecasters who just get excited and really want it to snow (wishcasting). My point is, there is a method to my madness. Every forecaster has a strategy, and I respect all of my professional colleagues who work every day to put their forecasts in the public domain, for your consumption and subjective criticism, all in the interest of public safety.

Follow the Science, Not the Hype

Follow your favorite (credible) sources and be sure to follow the science — not the hype or your hopes for snow (it’s easy to get wrapped up in the excitement and then you find yourself listening only to the forecasters who fuel the hype). The NWS does not hype and I absolutely encourage you guys to follow your local NWS office on Facebook and Twitter, in addition to iWeatherNet. Between the two organizations, you will get the full picture and you will be better prepared because of it.